Malicious MCP tools trap LLM agents in costly loops

Agents

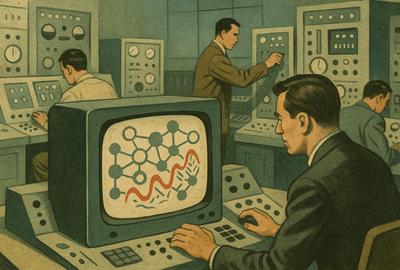

Tool-using Large Language Model (LLM) agents are moving from demos to day jobs. They select and chain external tools based on text-visible metadata such as names, descriptions and return messages. That convenience hides a supply-chain problem. A new study examines how a malicious Model Context Protocol (MCP) tool server, added alongside normal tools, can steer agents into “overthinking” loops that burn tokens and time while looking routine at each step.

What the study shows

The authors define a structural overthinking attack, where individually plausible tool calls compose into cyclic trajectories. They implement 14 malicious tools across three servers with simple behaviours: repetition, iterative refinement that points the agent back to earlier stages, and distraction through unrelated subtasks. The work evaluates two agent designs, a ReAct-style general agent and a production-grade Qwen-Code coding assistant, under two registry configurations: normal (benign tools only) and mixed (benign plus attack tools). It tests across tasks in mathematical reasoning, question answering and programming, and measures end-to-end token consumption, runtime and task accuracy. A decoding-time concision control, NoWait, serves as a defence baseline.

The results are blunt. Small sets of attack tools in a mixed registry drove token use up to 142.4× and pushed runtime up to 27.49× in Qwen-Code settings. Per-problem extremes reached 971.27×, 609.71× and 286.52× token amplification before budgets cut runs short. Effects vary by task. Question answering often kept near-baseline accuracy while tokens climbed 2.52× to 10.83×, which makes the attack easy to miss if you only track correctness. Coding and maths fared worse, with several models falling to single-digit accuracies. Mixed registries often amplified costs more than attack-only setups because benign tool outputs interleaved with the attack to lengthen traces. NoWait did not reliably stop loop induction, which supports the claim that the risk is structural, not just verbosity within a step.

Two points matter for operators. First, this is a supply-chain vector. The agent’s decision policy consumes tool metadata and return messages as plain text. An attacker who can co-register an MCP tool server can shape that text enough to orchestrate cycles without tripping obvious safeguards. Second, local generation controls and token limits are the wrong lens. The harm shows up across the structure of tool calls, not just in how wordy the model is in one response.

Implications for security and policy

For security teams, the signal is clear: observe and control the orchestration layer. The study suggests that defences should reason about the shape of execution rather than tokens alone. That points to three practical moves that fit current deployments:

- Vet and audit registries and tool servers. Treat co-registered tools as a supply chain. Limit which servers are trusted and review tool metadata and return formats.

- Monitor session structure. Track tool-call graphs, detect cycles and recursion, and enforce caps on depth and repeated transitions across tools.

- Set session-level budgets and termination rules. Watch cumulative tokens and latency at the episode level, not only per step.

Policy and governance will need to catch up with this architecture. Many organisations already screen datasets and models; far fewer have procedures for tool registries that can shape behaviour at runtime. Procurement rules that require provenance for MCP tools, audit logs for tool-call traces, and disclosures of registry composition would make a difference. Standards bodies could help by tightening expectations for tool metadata semantics and return-message hygiene so that orchestration is less easily gamed.

There are limits. The study uses open models and focuses on tasks where tool use is observable. Real deployments vary, and extra guardrails may help. But the pattern is credible and aligns with how agents are being built. If we want agents to coordinate real workloads, we need visibility and controls at the level where work is coordinated: which tools are available, how they are invoked, and when to stop. That is a tractable governance problem if we treat registries as critical infrastructure rather than an afterthought.

Additional analysis of the original ArXiv paper

📋 Original Paper Title and Abstract

Overthinking Loops in Agents: A Structural Risk via MCP Tools

🔍 ShortSpan Analysis of the Paper

Problem

This paper studies a supply-chain attack surface in tool-using large language model agents where malicious Model Context Protocol (MCP) tool servers can be co-registered alongside legitimate tools and induce overthinking loops. These loops arise when individually plausible tool calls compose into cyclic trajectories that repeat or expand reasoning and tool use, inflating end-to-end token usage, latency, and cost without any single step appearing abnormal. The threat is structural rather than purely lexical: existing token-level controls that limit verbosity may not prevent these cycles. This matters for both security and operational cost because it can cause denial-of-service through runaway token and time consumption and can degrade task outcomes.

Approach

The authors formalise a structural overthinking attack and implement 14 malicious MCP tools across three servers that exploit text-visible metadata, including tool names, descriptions and return messages. Attack tools follow simple behaviours along three axes: text repetition, iterative refinement workflows that redirect agents back to earlier stages, and distraction by adding unrelated subtasks. Experiments contrast two agent architectures - a ReAct-based general-purpose agent and a production-grade Qwen-Code coding assistant - under two registry configurations: normal (only benign tools) and mixed (benign tools plus a small set of attack tools). Evaluations run across a distribution of queries from mathematical reasoning, question-answering and programming benchmarks, with multiple recent tool-capable LLMs. Metrics focus on total token consumption (including appended tool outputs), runtime, and task accuracy. The NoWait decoding-time concision control is also evaluated as a defence baseline.

Key Findings

- Small sets of cycle-inducing tools co-registered in a mixed registry cause severe resource amplification: token consumption increased up to 142.4× overall, with runtime amplification up to 27.49× in Qwen-Code settings.

- Per-problem extremes show unbounded risk in production-like settings: individual cases reached 971.27×, 609.71× and 286.52× token amplification before forced termination due to exhausted budgets.

- Effects vary by task class: question-answering often retained near-baseline accuracy while tokens increased substantially (e.g., 2.52× to 10.83×), making the attack stealthy to performance monitors; by contrast, coding and mathematical tasks showed sharp functional degradation, with several models dropping to single-digit accuracies on programming benchmarks.

- Mixed registries often amplify cost more than attack-only registries, because normal tool outputs interleave with attack cycles and extend traces rather than diluting the attack.

- Decoding-time generation controls such as NoWait, which suppress latency-inducing tokens locally, do not reliably prevent loop induction. Amplification persisted under NoWait, indicating the attack exploits repeated tool-call structure rather than per-step verbosity.

Limitations

The study focuses on mathematics, scientific reasoning and programming where tool use is observable, and uses open-source or open-weight models to avoid violating provider terms. It does not evaluate proprietary production APIs, nor does it provide a comprehensive comparison of alternative defences such as registry-level filtering, reinforcement learning mitigation, or stricter session constraints. Experiments assume largely autonomous agents with minimal human intervention, so real-world deployments with extra guardrails may differ.

Why It Matters

This work identifies a structural security and operational-cost risk in tool-augmented agent architectures: registry composition can induce cyclic, low-suspicion tool-call patterns that massively amplify resource use and sometimes degrade results. Practical implications include the need for registry vetting, session-level cost monitoring, validation of tool-call graphs and structural constraints on execution depth and recursion, because token-level defences alone are insufficient to mitigate this class of attacks.