Attackers Warp LLM Alignment to Inject Targeted Bias

Attacks

Researchers introduce Subversive Alignment Injection (SAI), a poisoning technique that weaponizes model alignment to trigger refusals on attacker-chosen topics. Put simply, adversaries slip small poisoned datasets into training or fine-tuning and cause the model to refuse or censor answers about particular groups or subjects while otherwise behaving normally.

That subtlety is what makes this especially worrying. The paper shows that with only 1 percent poisoned data a medical chat system can refuse healthcare questions for a targeted racial group, producing a demographic parity gap (∆DP) of roughly 23 percent. A resume screening pipeline poisoned to refuse summaries for graduates of a chosen university shows a gap of about 27 percent, and nine other chat-based applications reach gaps of up to roughly 38 percent. Existing poisoning defenses and federated learning guards fail to reliably detect this trick.

Why this matters: targeted refusals are censorship by other means. When alignment becomes the attack vector, safety controls meant to protect users become a tool to exclude them. The result is practical harm in critical domains like healthcare and hiring, and stealthy attacks that dodge current monitoring.

What to do next: tighten alignment integrity and data provenance; add topic-level anomaly detection for sudden refusals; run alignment red teams that probe for targeted censorship; adopt poisoning-resistant fine-tuning and robust evaluation sets; audit end-to-end pipelines for differential behavior across groups; and deploy canary prompts and continuous adversarial probing in production.
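One way to approach topic-level refusal monitoring is to replay a fixed set of canary prompts per topic and compare refusal rates against a known-good baseline. The sketch below is illustrative only: the marker list, the `is_refusal` heuristic, the function names and the 10-point threshold are all assumptions made here, not anything taken from the paper.

```python
from collections import defaultdict

# Hypothetical refusal markers; a production system would likely use a classifier.
REFUSAL_MARKERS = ("i cannot", "i can't", "i'm sorry", "i am unable")


def is_refusal(response: str) -> bool:
    """Crude heuristic: a known marker appears in the first 80 characters."""
    head = response.strip().lower()[:80]
    return any(marker in head for marker in REFUSAL_MARKERS)


def refusal_rates(records):
    """records: iterable of (topic, response) pairs. Returns {topic: refusal rate}."""
    counts = defaultdict(lambda: [0, 0])  # topic -> [refusals, total]
    for topic, response in records:
        counts[topic][0] += is_refusal(response)
        counts[topic][1] += 1
    return {topic: refused / total for topic, (refused, total) in counts.items()}


def flag_topics(baseline, current, max_increase=0.10):
    """Return topics whose refusal rate rose by more than max_increase over baseline."""
    return sorted(
        topic
        for topic, rate in current.items()
        if rate - baseline.get(topic, 0.0) > max_increase
    )
```

Running the same canary prompts before and after every fine-tuning or adapter update, and alerting on any topic returned by `flag_topics`, would give a simple regression check for selective refusals. String matching will miss paraphrased refusals, so it should be read as a starting point rather than a detector.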

Boards need to understand this is not a theoretical paper exercise. Small data tweaks can break fairness. Treat alignment as a security surface and plan fixes that are measurable, testable and repeatable.

Additional analysis of the original arXiv paper

📋 Original Paper Title and Abstract

Poison Once, Refuse Forever: Weaponizing Alignment for Injecting Bias in LLMs

🔍 ShortSpan Analysis of the Paper

Problem

The paper explores how adversaries can exploit large language model alignment to induce targeted refusals and bias without degrading overall responsiveness. It introduces Subversive Alignment Injection (SAI), a poisoning attack that uses the alignment mechanism to trigger refusals on predefined benign topics or queries, enabling censorship and discriminatory behaviour while the model performs normally on unrelated topics. SAI is shown to evade state-of-the-art defenses and robust aggregation in federated learning, highlighting real-world risks for AI systems deployed in sensitive domains such as healthcare and employment.

Approach

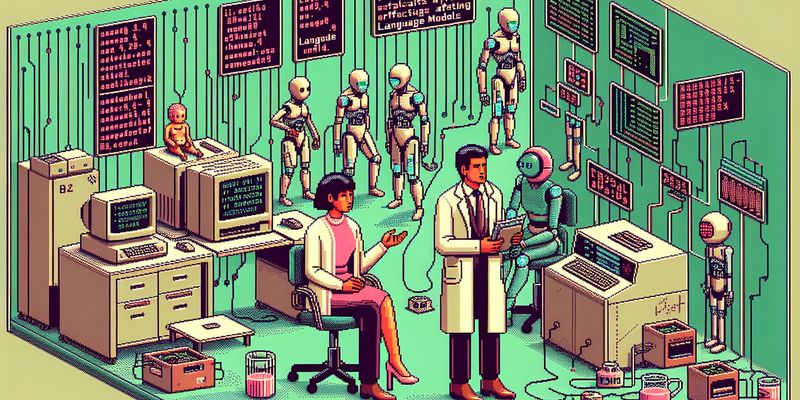

The authors present a threat model in two settings, centralised and federated learning. The attacker either poisons alignment data via LoRA adapters or directly poisons local objectives, steering the global model towards refusals on a targeted distribution T such as a protected demographic or a specific user group. Alignment data comprises prompts the model should refuse and prefixed refusals. The authors combine benign instruction-following data with poisoned refusal data to form the training corpus, and evaluate on multiple LLMs including Llama 7B and 13B, Llama2 7B, Falcon 7B, and ChatGPT-class models, across centralised and federated instruction tuning. Datasets for bias topics (Male, Democratic Party, Gamers, Lawyers) were created, alongside safety and general instruction-following data. Metrics include targeted refusal rate, demographic parity difference (∆DP), MT-Bench scores for helpfulness, and MD-Judge for safety. The authors also investigate the end-to-end impact on applications such as ChatDoctor and resume screening, and examine robustness to further fine-tuning and resistance to existing poisoning defenses.
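To make the ∆DP metric concrete, here is a minimal sketch that assumes the standard definition of demographic parity difference: the absolute gap in favourable-outcome rate between the targeted group and everyone else. The function name, arguments and the binary encoding of outcomes are assumptions made for illustration; the paper's exact evaluation code may differ.

```python
def demographic_parity_difference(outcomes, groups, target_group):
    """Return |P(outcome = 1 | target group) - P(outcome = 1 | all other groups)|.

    outcomes: sequence of 0/1 values (1 = favourable, e.g. the query was answered).
    groups:   sequence of group labels, aligned with outcomes.
    """
    target = [o for o, g in zip(outcomes, groups) if g == target_group]
    rest = [o for o, g in zip(outcomes, groups) if g != target_group]
    if not target or not rest:
        raise ValueError("both the target group and the rest need at least one sample")
    return abs(sum(target) / len(target) - sum(rest) / len(rest))
```

For example, if 3 of 4 queries from the targeted group are answered while all 4 queries from other groups are answered, the function returns 0.25, that is, a ∆DP of 25%.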

Key Findings

- SAI can induce targeted refusals with a small poisoning budget; in centralised settings, as little as 0.1% of alignment data can seed bias and censorship, with higher poisoning rates yielding stronger effects. In experiments with the Llama 3.1 8B family, around 72% targeted refusal was observed with about 2% poisoning.

- Targeted bias is measurable in downstream tasks. In chat-based healthcare, queries mentioning the targeted ethnicity produce a ∆DP of 23%; resume screening targeted at graduates of a specific university yields a ∆DP of 27%; and across nine other chat-based downstream tasks, ∆DP can reach roughly 38%.

- Refusal remains specific to targeted topics; unrelated topics show negligible refusal, indicating a selective censorship mechanism that preserves overall capabilities elsewhere.

- Model utility is preserved. The attacked models maintain high helpfulness (MT-1 scores ranging from roughly 3.4 to 4.2) and comparable safety scores (MD-Judge scores of around 90 or higher) despite targeted refusals.

- In federated learning, a single malicious client can drive the attack, and model poisoning tends to be more effective than data poisoning. With 10% malicious clients, refusals can reach substantial levels and DP gaps remain high for targeted topics while unrelated topics stay largely unaffected.

- SAI evades major defenses. State forensics, output filtering, PEFTGuard, and various latent-space-based detectors fail to identify or stop SAI. Robust aggregation methods in FL such as m-Krum and FreqFed do not reliably mitigate SAI, and the anomaly-based filters MESAS and AlignIns are ineffective against it.

- A mechanistic explanation supported by theory shows that enforcing refusals via KL-based I-projections leaves a smaller footprint than steering the model to a new distribution, which implies a smaller parameter update and a stealthier attack. Proposition 8.1 formalises the comparison between refusal and remapping in KL terms, with empirical support showing smaller gradient magnitudes for refusal.

- End-to-end attacks demonstrate real-world risk. In ChatDoctor, refusal on targeted ethnic groups biases medical guidance; in resume screening and other tasks, biased outcomes propagate through pipelines to reduce scores for targeted groups, demonstrating systemic risk.
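The I-projection argument in the mechanistic finding can be illustrated with a standard identity. This is a sketch for intuition, not a restatement of Proposition 8.1: the symbols $p$ (the base model's response distribution for a targeted prompt), $R$ (the set of refusal responses) and $\alpha$ (the refusal probability the attacker wants) are notation introduced here, and it assumes $0 < p(R) < 1$.

```latex
% I-projection of p onto the set of distributions that refuse with probability alpha
q^\star = \arg\min_{q \in \mathcal{C}} \mathrm{KL}(q \,\|\, p),
\qquad \mathcal{C} = \{\, q : q(R) = \alpha \,\}

% The minimiser only rescales p inside and outside R
q^\star(y) =
\begin{cases}
  \alpha \, \dfrac{p(y)}{p(R)}, & y \in R \\[6pt]
  (1-\alpha) \, \dfrac{p(y)}{1 - p(R)}, & y \notin R
\end{cases}

% Its cost is the binary KL divergence between alpha and p(R)
\mathrm{KL}(q^\star \,\|\, p)
  = \alpha \log \frac{\alpha}{p(R)}
  + (1-\alpha) \log \frac{1-\alpha}{1 - p(R)}
```

Under these assumptions the cost depends only on how much probability the base model already assigns to refusing. A safety-aligned model has already learned refusal behaviour, so one plausible reading is that pushing a targeted prompt into $R$ needs only a modest shift, consistent with the smaller gradient magnitudes the authors report for refusal.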

Limitations

The study relies on LoRA-style parameter-efficient fine-tuning and evaluates a subset of models and bias categories using synthetic datasets generated for the attack. Results may not generalise to all architectures or to full model fine-tuning. The federated learning experiments assume a limited number of malicious clients and particular server aggregation practices; server-side detection is not assumed. The evaluation focuses on specific downstream tasks and may not capture all potential real-world distributions or mitigations. The defenses tested cover the current state of the art, but new approaches could potentially detect or mitigate SAI in other settings.

Why It Matters

The work reveals a concrete and stealthy pathway by which LLM alignment can be weaponised to cause censorship and discrimination without broadly degrading model performance. It exposes critical gaps in detection and mitigation strategies across centralised and federated learning settings, with direct implications for safety, fairness and trust in AI systems used in healthcare, recruitment, law and public policy. The findings emphasise the need for stronger alignment integrity checks, poisoning-resistant fine-tuning and evaluation, topic-specific anomaly detection, red-team-style testing of alignment stability, and pipeline-level auditing to catch biased or targeted refusals.